Advanced Use of ChatGPT

Using the tool for advanced users

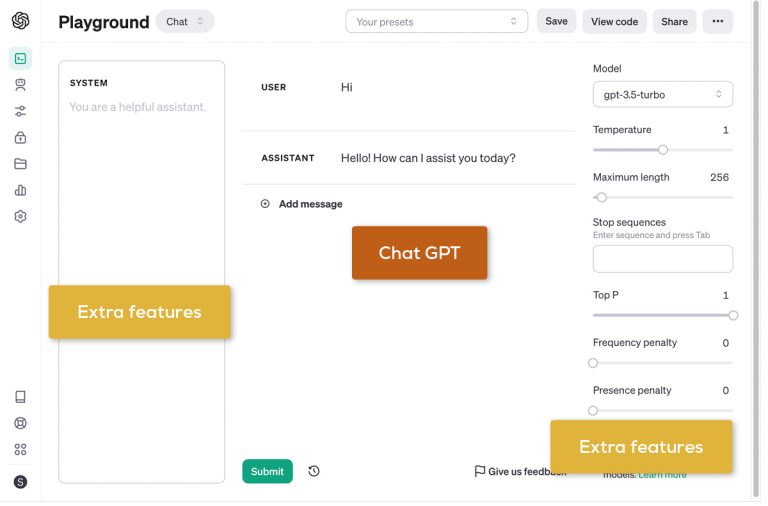

The OpenAI Playground is a powerful tool that enables users and product teams to precisely control and optimize the behavior and capabilities of the OpenAI models.

In this article, we shed light on advanced features that go beyond the standard ChatGPT experience and how these can be used for better control and validation of AI-based applications.

Detailed explanation of parameters

Temperature – controlling creativity

The parameter Temperature determines how predictable or variable the AI’s responses are. A lower value leads to more consistent answers, while a higher value results in more creative and less predictable outputs. This allows for adjusting the output based on the specific use case.

Here are some general guidelines for setting the temperature for different types of text:

Email Writing

For business or formal emails, a temperature of around 0 to 0.3 is recommended to generate consistent and predictable responses that are professional and concise.Informative Text

For informative or explanatory texts such as articles or guides, a temperature between 0.3 and 0.5 may be suitable to ensure accuracy while allowing minimal creativity for engaging wording.Creative Writing/Poetry

In creative writing or poetry, a higher temperature between 0.7 and 1.0 can be used. This allows the AI to generate creative and unique content that surprises and emotionally resonates with readers.

Please note that these values serve as general guidance and should be adjusted based on specific requirements and preferences. Experimentation may be necessary to find the right balance for each individual use case

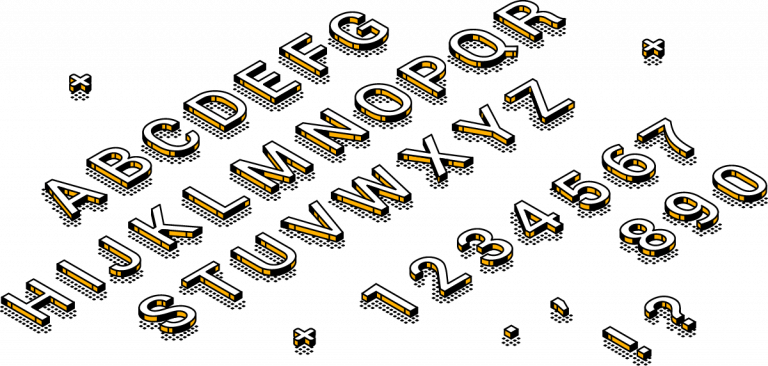

Tokens - basic unit

Tokens are the basic building blocks that AI language models like GPT-* use to analyze and generate text. They can be understood as individual words, punctuation marks, or even parts of words. More complex words or phrases can be divided into multiple tokens.

Since English is a very diverse language with many different word lengths, the length of a token is not simply measured in words but rather connected to the actual number of characters. On average, a token in English texts corresponds to about ¾ of a word or approximately four characters. This tokenization allows language models to break down text into smaller, manageable parts, enabling detailed analysis and generation of text.

Context length – handling data

The context provided explains the concept of context length and how it affects the model’s ability to generate responses. It also provides examples of different models and their respective context window sizes.

To summarize, the context length refers to the amount of input/output context that the model considers when generating responses. This value is crucial for tasks that require a comprehensive understanding.

For instance, GPT-4 Turbo has a context window of 128K tokens, while the updated GPT-3.5 Turbo operates with a 16K token window.

Using an official conversion rate where one token corresponds to approximately four characters in English text, we can convert the context lengths into the number of A4 pages.

A general rule for standard English texts is that three-quarters (¾) of a word corresponds to one token.

Assuming an average A4 page contains about 250 words and each word is approximately four characters or three-quarters (¾) of a token, we can calculate:

One A4 page = 250 words = 250 / (¾) tokens ≈ 333 tokens

With this conversion, we have:

GPT-4 Turbo

With a context window of 128,000 tokens, theoretically 128,000/333 ≈ 384 A4 pages of context could be processed.GPT-3.5 Turbo

With a context window of 16,000 tokens, theoretically 16,000/333 ≈ 48 A4 pages of context could be processed.

When working with current AI models and their context windows, it is important to note that while these models can “remember” large amounts of text at once, the way they distribute their attention across this text varies.

The context window defines the amount of text the model can retain in memory at a given time, while the attention mechanism within this window determines how the model processes and prioritizes different parts of the text to generate responses. Attention span refers more to the quality and focus of processing within the given context window.

To better understand this distinction, let’s consider an example involving a 6-year-old:

“Go upstairs, brush your teeth, wash your face, put on your pajamas, turn off the bathroom light when you’re done, and bring down your favorite book so we can read it together. Also remember to close the toothpaste tube!”

A child could certainly understand the entire set of instructions or “context window,” but their “attention span” might be strained. It is possible for them to execute all steps without error but also likely that some instructions may be overlooked.

Similarly, with AI models, content located in the middle of a long context may receive less attention and be underrepresented compared to content at either end when generating responses. As a result, both quality and consistency of answers may vary especially if relevant information is distributed across a lengthy context window.

Model selection

The choice of the right model depends on the specific task. OpenAI offers various models with different capabilities:

GPT-3.5-Turbo: It is the most cost-effective model in the GPT-3.5 family and optimized for chat applications, but also suitable for traditional completion tasks.

GPT-4: As a large multimodal model (accepts text and image inputs and outputs text), it solves difficult problems with higher accuracy due to its broader general knowledge and advanced reasoning abilities.

GPT-4 Turbo: It has the capabilities of GPT-4, but with improvements in instruction following, more reproducible outputs, and higher context length.

The choice of model should be based on the complexity of the task, required multimodality, and ability to follow instructions.

Message Types – User, AI, System

Additionally, the Playground offers different types of messages for input:

User: Simulates the input of a user to the AI.

AI: Response of the AI to the user input.

System: System messages that can be used for control tasks or specific instructions/configuration to the model.

By using the “System” message type, a developer can configure the model to respond only in a certain format – such as JSON or XML. This is especially useful if the AI model is to be used as an API for structured data or if specific input parameters are being queried.

Example

Temperature: 0.7

System message:

# Behaviour

You are an api and can only response with valid, directly parsable json.

Your Output-JSON must be baseed on this jsonschema:

{

"$schema": "http://json-schema.org/draft-07/schema#",

"type": "object",

"title": "Todo Task",

"required": ["task", "description"],

"properties": {

"task": {

"type": "string",

"description": "The title of the task"

},

"description": {

"type": "string",

"description": "Additional details about the task"

}

}

}

Output:

{ "task": "Complete assignment",

"description": "Finish the API assignment by the deadline" }

This method is by no means limited to simple structures. My experience shows that even very extensive and complicated JSON structures can be generated if the schema is explained precisely.

In 99 percent of cases, the results are valid.

The next articles in this series will examine such complex examples in more detail.

Pricing model and cost efficiency.

The costs are calculated based on the number of processed tokens, which allows for direct control over usage and associated pricing. By testing different parameters and models in the playground, you can quickly assess costs and optimize the cost-performance ratio.

Understanding and adjusting these parameters enables fine-grained control of AI interactions in OpenAI’s playground, making it possible to integrate AI into products and services more effectively and cost-efficiently.

Links & further reading:

Alptuğ Dingil

Alptuğ joined Inspired in 2022 as a software engineer. Besides his customer projects he's always looking for a new challenge. So lately he got engaged with Kubernetes and the configuration of a DIY cluste and got certified as a Google professional cloud architect.

Recent posts

IEx is awesome!

Elixir’s interactive shell, known as IEx, is a powerful tool that allows Elixir developers to quickly test and evaluate code

Engagement: Hiring process at Inspired

For every completed project, we usually receive two new inquiries. And most projects are simply too exciting to turn down.

Case Study: Tafel e.V. – eco-Plattform

Together towards digitization With its twelve regional associations and over 960 food banks, Tafel Deutschland e.V. is one of Germany’s

Case Study: Leadportale.com

Developing the right product Leadportale.com is an industry-specific real estate lead management solution for estate agents and financiers. Delivered as